In this chapter

- Jensen’s inequality

- how to examine time-series data to model returns

- the Wiener process, a mathematical model of randomness

- a simple model for equities, currencies, commodities and indices

The popular forms of ‘analysis’

There are three forms of ‘analysis’ commonly used in the financial world:

- Fundamental

- Technical

- Quantitative

Fundamental analysis is all about trying to determine the ‘correct’ worth of a company by an in-depth study of balance sheets, management teams, patent applications, competitors, lawsuits, etc.

There are two difficulties with this approach:

- It is very, very hard.

- Even if you have the perfect model for the value of a firm, it doesn’t mean you can make money from it.

In technical analysis you don’t care about anything to do with the company other than the information contained within its stock price history.

Quantitative analysis is all about treating financial quantities such as stock prices or interest rates as random, and then choosing the best models for the randomness.

- It forms a solid foundation for portfolio theory, derivatives pricing and risk management.

Why we need a model for randomness: Jensen’s inequality

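For a convex function $f$ and a random variable $S$, Jensen’s inequality states that

$$E[f(S)] \ge f(E[S]).$$

Writing $S = \bar{S} + \epsilon$, where $\bar{S} = E[S]$ and $E[\epsilon] = 0$, a Taylor expansion gives

$$E[f(S)] \approx f(\bar{S}) + \tfrac{1}{2} f''(\bar{S})\, E[\epsilon^2].$$

The expected value of a convex function of $S$, such as an option payoff, therefore depends on the variance of $S$ and not only on its expected value. This is why we need a model for the randomness.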
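A minimal numerical check of the inequality, using a hypothetical asset that ends at 50 or 150 with equal probability and a call-style payoff max(S - 100, 0). All values are illustrative assumptions. It also checks that for the quadratic f(S) = S^2 the second-order expansion E[f(S)] = f(E[S]) + ½ f''(E[S]) Var(S) holds exactly.

```python
# Jensen's inequality for a convex payoff: E[f(S)] >= f(E[S]).
# Hypothetical example: S is 50 or 150 with equal probability, strike 100.

def call_payoff(s, strike=100.0):
    """Convex payoff max(S - K, 0)."""
    return max(s - strike, 0.0)

outcomes = [50.0, 150.0]
probs = [0.5, 0.5]

expected_s = sum(p * s for p, s in zip(probs, outcomes))                     # E[S]
expected_payoff = sum(p * call_payoff(s) for p, s in zip(probs, outcomes))  # E[f(S)]
payoff_of_expected = call_payoff(expected_s)                                 # f(E[S])

print(expected_s, expected_payoff, payoff_of_expected)  # 100.0 25.0 0.0

# For the smooth convex f(S) = S**2 (so f'' = 2) the expansion is exact:
# E[S**2] = E[S]**2 + 0.5 * 2 * Var(S)
variance = sum(p * (s - expected_s) ** 2 for p, s in zip(probs, outcomes))
lhs = sum(p * s ** 2 for p, s in zip(probs, outcomes))
rhs = expected_s ** 2 + 0.5 * 2.0 * variance
```

The randomness adds 25 to the expected payoff even though the payoff evaluated at the expected price is zero.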

Similarities between equities, currencies, commodities and indices

Examining returns

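Let $S_i$ be the asset price on day $i$ and define the return from day $i$ to day $i+1$ as

$$R_i = \frac{S_{i+1} - S_i}{S_i}.$$

Given $M$ such returns, the mean of the returns distribution is

$$\bar{R} = \frac{1}{M} \sum_{i=1}^{M} R_i$$

and the sample standard deviation is

$$\sqrt{\frac{1}{M-1} \sum_{i=1}^{M} \left(R_i - \bar{R}\right)^2}.$$

Suppose we believe that the empirical returns are close enough to normal for a normal distribution to be a good approximation. Then we can model the returns as

$$R_i = \bar{R} + \text{standard deviation} \times \phi,$$

where $\phi$ is drawn from a standardized normal distribution.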
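The calculation of returns, their mean and their sample standard deviation can be sketched as follows. The price series is hypothetical, and the last line draws one return from the normal approximation R = mean + standard deviation × φ with φ ~ N(0, 1).

```python
import math
import random
import statistics

# Hypothetical daily closing prices S_1, ..., S_{M+1} (illustrative only).
prices = [100.0, 101.0, 99.5, 100.5, 102.0, 101.0]

# Returns R_i = (S_{i+1} - S_i) / S_i
returns = [(s_next - s) / s for s, s_next in zip(prices, prices[1:])]
m = len(returns)

# Mean of the returns: (1/M) * sum of R_i
mean_return = sum(returns) / m

# Sample standard deviation with the M - 1 denominator
sample_sd = math.sqrt(sum((r - mean_return) ** 2 for r in returns) / (m - 1))

# statistics.stdev uses the same M - 1 convention, for comparison
stdlib_sd = statistics.stdev(returns)

# Normal approximation: one simulated return, mean + sd * phi, phi ~ N(0, 1)
rng = random.Random(42)
simulated_return = mean_return + sample_sd * rng.gauss(0.0, 1.0)
```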

Timescales

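Call the time step $\delta t$. Suppose the mean of the returns scales with the size of the time step,

$$\text{mean} = \mu\, \delta t,$$

for some constant $\mu$. Ignoring randomness for the moment, the model is $(S_{i+1} - S_i)/S_i = \mu\, \delta t$, and as $\delta t \to 0$ this gives $S(t) = S_0 e^{\mu t}$. This choice of scaling has two nice properties: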

- In the absence of any randomness the asset exhibits exponential growth, just like cash in the bank.

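- The model is meaningful in the limit as the time step tends to zero. If I had chosen to scale the mean of the returns distribution with any other power of $\delta t$, it would have resulted in either a trivial model ($S_T = S_0$) or infinite values for the asset.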

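The standard deviation of the returns is scaled with the square root of the time step,

$$\text{standard deviation} = \sigma\, \delta t^{1/2},$$

where $\sigma$ is a parameter measuring the amount of randomness: the larger $\sigma$, the more uncertain the return. This is the only power of $\delta t$ for which the variance of the return over a fixed period stays finite and nonzero as $\delta t \to 0$.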
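To see why the powers δt and δt^{1/2} are special, here is a sketch that adds up n independent per-step returns over a fixed horizon and reports their total mean and variance. The parameter values are hypothetical, and summing returns is only a small-step stand-in for compounding; the point is how the totals depend on the step size.

```python
import math

# Hypothetical annualised parameters, chosen only for illustration.
MU, SIGMA, HORIZON = 0.1, 0.2, 1.0

def horizon_moments(n_steps, mean_power=1.0, sd_power=0.5):
    """Mean and variance of the sum of n_steps independent per-step returns
    over HORIZON, when each step has mean MU * dt**mean_power and standard
    deviation SIGMA * dt**sd_power, with dt = HORIZON / n_steps."""
    dt = HORIZON / n_steps
    total_mean = n_steps * MU * dt ** mean_power
    total_var = n_steps * (SIGMA * dt ** sd_power) ** 2
    return total_mean, total_var

for n in (10, 1_000, 100_000):
    print(
        n,
        horizon_moments(n),                  # mean ~ dt, sd ~ dt**0.5: fixed mean and variance
        horizon_moments(n, sd_power=1.0),    # sd ~ dt: variance vanishes
        horizon_moments(n, sd_power=0.25),   # sd ~ dt**0.25: variance blows up
    )
```

With the default powers the total mean is MU * HORIZON and the total variance is SIGMA**2 * HORIZON for every n; any other power drives one of them to zero or infinity as the step shrinks.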

Putting these scalings explicitly into our asset return model gives

$$R_i = \frac{S_{i+1} - S_i}{S_i} = \mu\, \delta t + \sigma \phi\, \delta t^{1/2}.$$

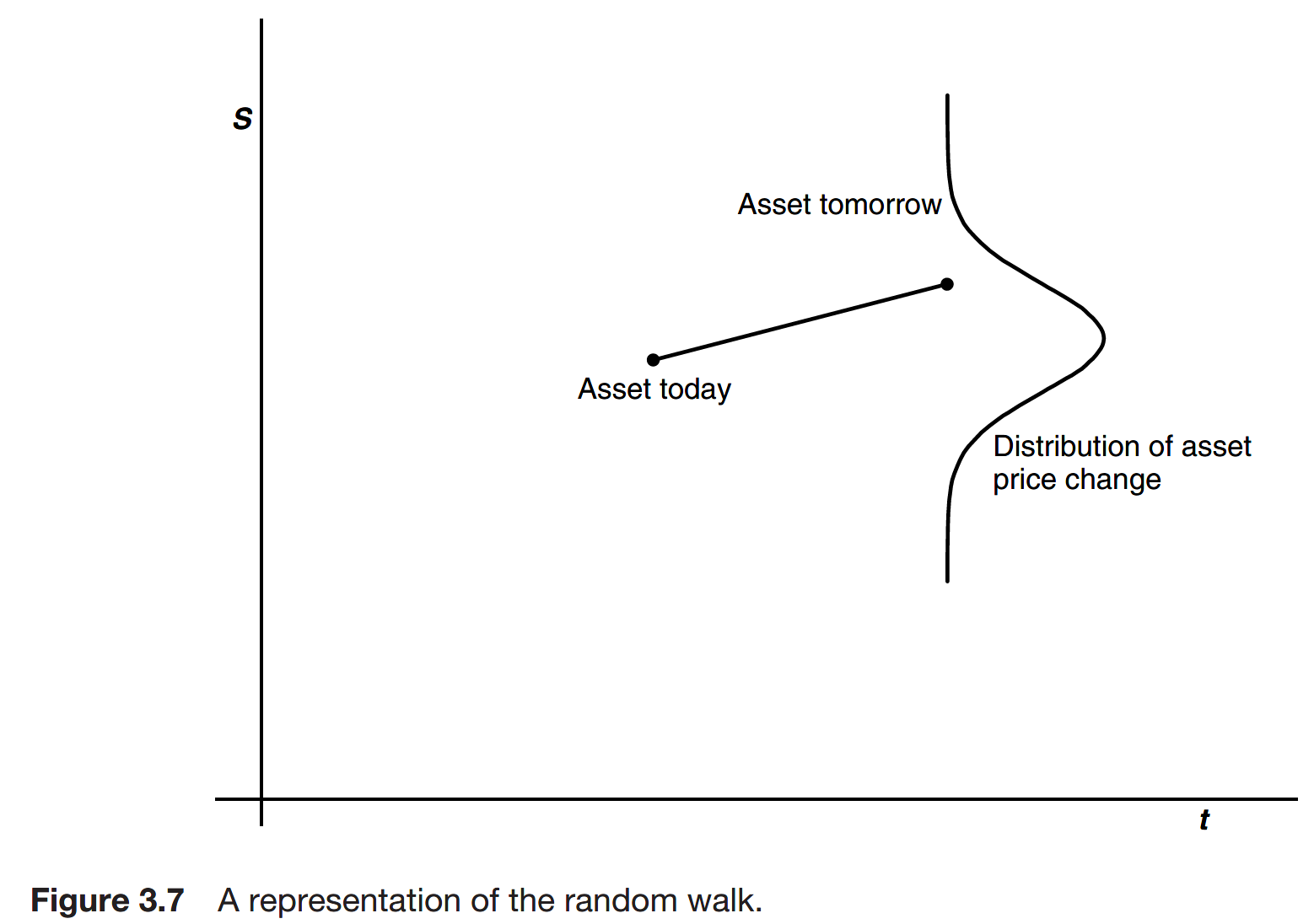

We can think of this equation as a model for a random walk of the asset price.

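The parameter $\mu$ is called the drift rate, the expected return or the growth rate of the asset.

The volatility $\sigma$ measures the standard deviation of the returns, scaled to a unit time step.

The parameter $\phi$ is a random variable drawn from a standardized normal distribution (mean zero, variance one).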

The volatility is the most important and elusive quantity in the theory of derivatives.

Because the drift term scales with $\delta t$ and the volatility term with $\delta t^{1/2}$, the drift and volatility have different effects on the asset path. Over short timescales the volatility dominates and the drift is barely visible. Over long timescales, for instance decades, the drift becomes important.
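A quick comparison of the two terms for a few horizons. The drift of 10% and volatility of 20% per year are hypothetical values, and 252 trading days per year is an assumed convention.

```python
import math

# Hypothetical annualised drift and volatility (illustration only).
MU, SIGMA = 0.10, 0.20

def noise_to_drift(dt):
    """Ratio of the volatility term sigma*sqrt(dt) to the drift term mu*dt."""
    return (SIGMA * math.sqrt(dt)) / (MU * dt)

for label, dt in [("1 day", 1 / 252), ("1 month", 1 / 12), ("1 year", 1.0), ("10 years", 10.0)]:
    print(f"{label:>8}: drift={MU * dt:.4f}  vol term={SIGMA * math.sqrt(dt):.4f}  "
          f"ratio={noise_to_drift(dt):.2f}")
```

The ratio equals SIGMA / (MU * sqrt(dt)), so with these numbers the random term is about 32 times the drift over one day and the two are equal at a horizon of four years.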

Estimating volatility

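If $\delta t$ is the time step between data points and $\bar{R}$ is the mean return, the most common estimate of the volatility is

$$\sigma = \sqrt{\frac{1}{(M-1)\,\delta t} \sum_{i=1}^{M} \left(R_i - \bar{R}\right)^2}.$$

An estimate based on logarithmic returns,

$$\sigma = \sqrt{\frac{1}{(M-1)\,\delta t} \sum_{i=1}^{M} \left(\log S_{i+1} - \log S_i\right)^2},$$

can also be used, where $S_i$ is the asset price at the $i$th data point.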
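The two estimators below are a sketch assuming the forms sqrt(Σ(R_i - R̄)² / ((M-1)δt)) and sqrt(Σ(log S_{i+1} - log S_i)² / ((M-1)δt)). The function names are hypothetical, and the test prices are synthetic, generated from the discrete random walk with a known volatility of 0.2 so that both estimates can be checked against it.

```python
import math
import random

def volatility_simple(prices, dt):
    """sqrt( sum (R_i - R_bar)^2 / ((M - 1) dt) ), R_i = (S_{i+1} - S_i) / S_i."""
    returns = [(s1 - s0) / s0 for s0, s1 in zip(prices, prices[1:])]
    m = len(returns)
    r_bar = sum(returns) / m
    return math.sqrt(sum((r - r_bar) ** 2 for r in returns) / ((m - 1) * dt))

def volatility_log(prices, dt):
    """sqrt( sum (log S_{i+1} - log S_i)^2 / ((M - 1) dt) ); the mean is not subtracted."""
    log_returns = [math.log(s1 / s0) for s0, s1 in zip(prices, prices[1:])]
    m = len(log_returns)
    return math.sqrt(sum(x * x for x in log_returns) / ((m - 1) * dt))

# Synthetic data (hypothetical): ten years of daily prices, true volatility 0.2.
rng = random.Random(1)
DT, TRUE_SIGMA = 1 / 252, 0.2
prices = [100.0]
for _ in range(2520):
    phi = rng.gauss(0.0, 1.0)
    prices.append(prices[-1] * (1 + 0.1 * DT + TRUE_SIGMA * math.sqrt(DT) * phi))

print(volatility_simple(prices, DT), volatility_log(prices, DT))
```

For small time steps the two estimates are very close, because the mean return and the difference between simple and log returns are both small compared with the random term.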

It is highly unlikely that volatility is constant in time. If you want to know the volatility today you must use some past data in the calculation. Unfortunately, this means that there is no guarantee that you are actually calculating today’s volatility.

Because all returns in the window are equally weighted, any large return stays in the estimate of volatility until the 10 (or 30, or 100) days of the averaging window have passed, and then drops out abruptly. This gives rise to a plateau in the estimated volatility that is totally spurious.
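The plateau is easy to reproduce. This sketch uses hypothetical daily returns of ±0.5% with a single 8% shock and a 10-day equally weighted window; the function name and the data are assumptions for illustration.

```python
import math

def rolling_volatility(returns, window, dt):
    """Equally weighted volatility over each run of `window` consecutive
    returns: mean subtracted, (window - 1) in the denominator, divided by dt."""
    vols = []
    for end in range(window, len(returns) + 1):
        chunk = returns[end - window:end]
        r_bar = sum(chunk) / window
        var = sum((r - r_bar) ** 2 for r in chunk) / ((window - 1) * dt)
        vols.append(math.sqrt(var))
    return vols

# Hypothetical daily returns: small alternating moves plus one large shock on day 15.
returns = [0.005 if i % 2 == 0 else -0.005 for i in range(40)]
returns[15] = 0.08
vols = rolling_volatility(returns, window=10, dt=1 / 252)

for k, v in enumerate(vols):
    print(f"window ending on day {k + 9}: {v:.3f}")
```

The estimate jumps on the day of the shock, stays flat for exactly ten windows, then falls back the day the shock leaves the window, even though nothing happened on that day.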

Since volatility is not directly observable, and because of the plateauing effect in the simple measure of volatility, you might want to use other volatility estimates.
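The text does not say which alternative to use. One common choice, shown here purely as an assumed example, is an exponentially weighted moving average in which the variance is updated as var_n = λ var_{n-1} + (1 - λ) R_n² / δt, so a large return fades gradually instead of dropping out all at once. The decay factor λ = 0.94 is an assumption (the value popularized by RiskMetrics for daily data), and the data are hypothetical.

```python
import math

def ewma_volatility(returns, dt, lam=0.94):
    """Exponentially weighted volatility: var_n = lam * var_{n-1} + (1 - lam) * R_n**2 / dt,
    started from the first squared return. lam = 0.94 is an assumed default."""
    var = returns[0] ** 2 / dt
    vols = [math.sqrt(var)]
    for r in returns[1:]:
        var = lam * var + (1 - lam) * r * r / dt
        vols.append(math.sqrt(var))
    return vols

# Hypothetical daily returns: small alternating moves plus one large shock on day 15.
returns = [0.005 if i % 2 == 0 else -0.005 for i in range(40)]
returns[15] = 0.08
vols = ewma_volatility(returns, dt=1 / 252)
```

After the shock the estimate decays smoothly every day, with no plateau and no sudden drop.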

The random walk on a spreadsheet
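A minimal sketch of the spreadsheet random walk, assuming each row holds one time step: each new price is the previous one multiplied by 1 + μδt + σφδt^{1/2}, with φ drawn from a standard normal distribution, just as one would fill a formula down a column. The function name and parameter values are hypothetical.

```python
import math
import random

def simulate_path(s0, mu, sigma, dt, n_steps, seed=0):
    """Discrete random walk S_{i+1} = S_i * (1 + mu*dt + sigma*phi*sqrt(dt)),
    phi ~ N(0, 1), one spreadsheet row per step."""
    rng = random.Random(seed)
    path = [s0]
    for _ in range(n_steps):
        phi = rng.gauss(0.0, 1.0)
        path.append(path[-1] * (1 + mu * dt + sigma * phi * math.sqrt(dt)))
    return path

# One year of daily steps with hypothetical parameters.
path = simulate_path(100.0, mu=0.1, sigma=0.2, dt=1 / 252, n_steps=252)
```

Setting sigma to zero removes the randomness and recovers the exponential growth described above: the price compounds at 1 + mu*dt per step.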

The Wiener process

You can think of $dX$ as a random variable drawn from a normal distribution with mean zero and variance $dt$:

$$E[dX] = 0 \quad \text{and} \quad E[dX^2] = dt.$$
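A sketch of a discretized Wiener process on [0, 1], assuming a uniform grid of 100,000 steps (a hypothetical choice). Each increment is drawn from N(0, dt); the sample averages of dX and dX² should be close to 0 and dt, and the sum of dX² over the interval should be close to its length.

```python
import math
import random

def wiener_increments(t_final, n_steps, seed=0):
    """Increments dX ~ N(0, dt) on a uniform grid with dt = t_final / n_steps."""
    rng = random.Random(seed)
    dt = t_final / n_steps
    # random.gauss takes the standard deviation, so pass sqrt(dt)
    return [rng.gauss(0.0, math.sqrt(dt)) for _ in range(n_steps)], dt

increments, dt = wiener_increments(1.0, 100_000, seed=7)
x_final = sum(increments)                        # X(T) - X(0), distributed N(0, T)
sum_sq = sum(dx * dx for dx in increments)       # close to T for small dt
mean_dx = sum(increments) / len(increments)      # close to E[dX] = 0
mean_sq = sum_sq / len(increments)               # close to E[dX^2] = dt
```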

The important point about using the Wiener process, instead of normal distributions in discrete time, is that it lets us build up a continuous-time theory.

The widely accepted model for equities, currencies, commodities and indices

The stochastic differential equation

$$dS = \mu S\, dt + \sigma S\, dX$$

is a continuous-time model for an asset price. It is the most widely accepted model for equities, currencies, commodities and indices.
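A sketch that samples this model at a fixed time T, assuming the standard closed-form solution S(T) = S(0) exp((μ - σ²/2)T + σX(T)) with X(T) ~ N(0, T). That solution follows from Itô's lemma and is not derived in this section; parameter values are hypothetical. The average price grows at rate μ while the average of log S grows only at μ - σ²/2, which is Jensen's inequality again, this time for the concave function log.

```python
import math
import random

def terminal_prices(s0, mu, sigma, t, n_paths, seed=0):
    """Sample S(T) from the exact solution of dS = mu S dt + sigma S dX:
    S(T) = s0 * exp((mu - sigma**2 / 2) * T + sigma * X(T)), X(T) ~ N(0, T)."""
    rng = random.Random(seed)
    drift = (mu - 0.5 * sigma ** 2) * t
    return [s0 * math.exp(drift + sigma * rng.gauss(0.0, math.sqrt(t)))
            for _ in range(n_paths)]

s0, mu, sigma, t = 100.0, 0.1, 0.2, 1.0
samples = terminal_prices(s0, mu, sigma, t, 200_000, seed=3)
mean_price = sum(samples) / len(samples)                      # close to s0 * exp(mu * t)
mean_log = sum(math.log(s) for s in samples) / len(samples)   # close to log(s0) + (mu - sigma**2/2) t
```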

Further reading